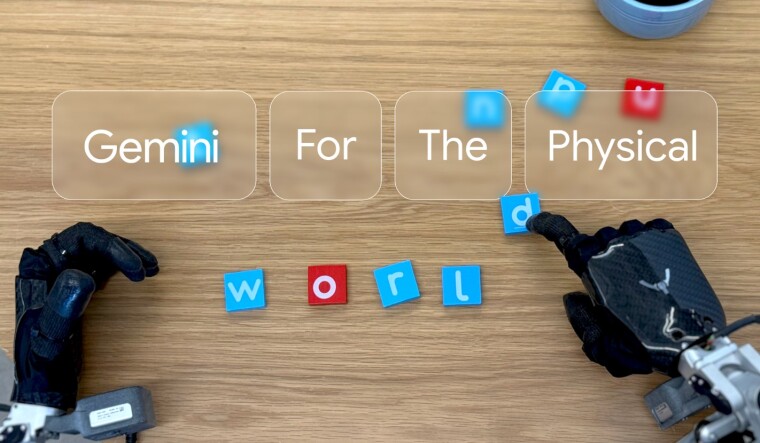

Google DeepMind has unveiled Gemini Robotics and Gemini Robotics-ER, two new AI models built on Gemini 2.0 and designed for controlling robots.

Gemini Robotics is a vision-language-action (VLA) model that extends Gemini 2.0 by adding physical actions as an output, so robots can interpret and respond to situations they were not trained on. Google reports that Gemini Robotics more than doubles the performance of other state-of-the-art VLA models on a generalization benchmark. Because it builds on Gemini 2.0's language understanding, it can follow human commands given in many different languages.

The model also shows advanced dexterity: it can carry out complex, multi-step tasks such as folding origami, as well as precise manipulation such as placing a snack in a Ziploc bag.

Gemini Robotics-ER ("embodied reasoning") is a vision-language model focused on spatial reasoning, which roboticists can connect to their existing low-level robot controllers. Out of the box, it provides the capabilities needed to control a robot, including perception, state estimation, spatial understanding, planning, and code generation.

Google is collaborating with Apptronik to build humanoid robots powered by Gemini 2.0. It is also working with Agile Robots, Agility Robotics, Boston Dynamics, and Enchanted Tools to test and further develop Gemini Robotics-ER.

Together, the two models aim to help robots understand and carry out complex tasks, which could make it practical to deploy robots in a wider range of environments.